I stumbled upon an interesting issue today when checking on the index status of a new WordPress site I'd launched.

I'd received a notification from Search Console that my pages weren't indexed due to a noindex tag. As I'd resolved that a few days earlier, I thought I'd double-check whether I had missed any pages.

All pages were still showing as noindexed. Whilst that was probably just a delay in the reporting, I decided to dig around to be sure.

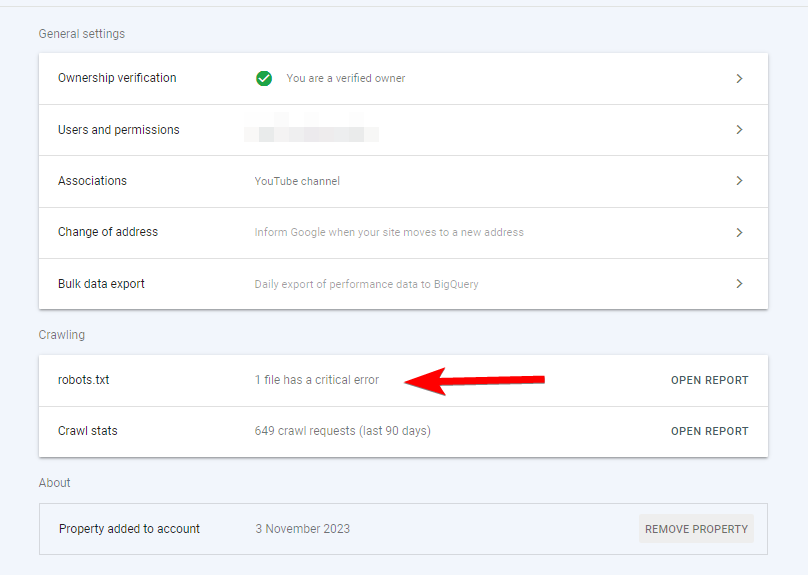

In Crawl Stats everything looked fine, but on the Settings page I noticed a critical error under the robots.txt status.

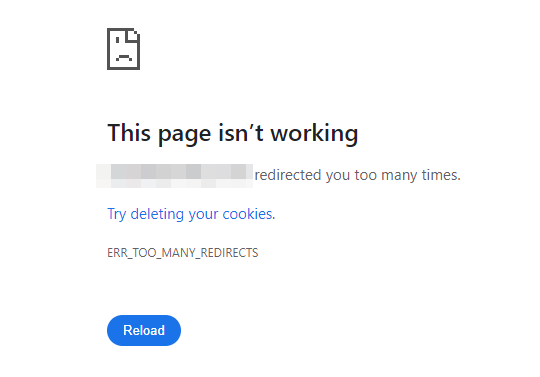

From the robots.txt menu I could see the status of "Not fetched – Redirect error". Opening the robots.txt file in my browser gave the same result: I was stuck in a redirect loop.
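If you'd rather confirm a loop like this without relying on the browser, a rough Python sketch along these lines can trace the redirects one hop at a time. The function names (`http_next_hop`, `trace_redirects`) and the `example.com` URLs are my own placeholders, not something from Search Console or Cloudflare, and the hop limit of 10 is an arbitrary assumption.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = (301, 302, 303, 307, 308)


def http_next_hop(url, timeout=10):
    """Request url once, without following redirects.

    Returns the absolute redirect target, or None if the response
    is not a redirect.
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("GET", path, headers={"User-Agent": "robots-loop-check"})
        resp = conn.getresponse()
        location = resp.getheader("Location")
        if resp.status in REDIRECT_CODES and location:
            return urljoin(url, location)
        return None
    finally:
        conn.close()


def trace_redirects(url, next_hop=http_next_hop, max_hops=10):
    """Follow redirects by hand; return (chain, looped).

    looped is True as soon as a redirect points at a URL already visited.
    """
    chain = [url]
    while len(chain) <= max_hops:
        target = next_hop(chain[-1])
        if target is None:
            return chain, False
        looped = target in chain
        chain.append(target)
        if looped:
            return chain, True
    return chain, False


# Offline illustration with a hypothetical redirect table: the HTTPS URL
# redirects to itself, which is what a loop on robots.txt looks like.
fake_site = {"https://example.com/robots.txt": "https://example.com/robots.txt"}
chain, looped = trace_redirects("https://example.com/robots.txt", next_hop=fake_site.get)
print(looped, chain)
```

Against a live site you would call `trace_redirects("https://your-domain/robots.txt")` with the default `next_hop`; passing in a lookup function as above just makes the logic easy to try without touching the network.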

I ran through the usual checks and also went into Yoast's settings to create a robots.txt file, thinking that might fix it, but it didn't change anything.

How to fix the robots.txt redirect loop on WordPress

As any half-decent SEO might do at this stage, I took to Google to find an answer.

One of the results which caught my eye was a Cloudflare thread from another SEO I recognised.

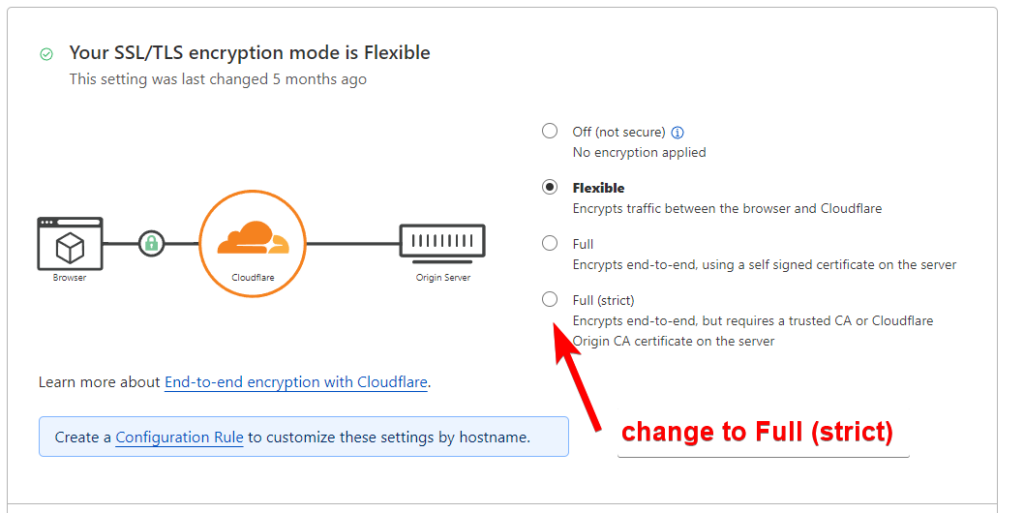

They had been asking about the robots.txt loop just a few days earlier, and a Cloudflare team member suggested that they switch the SSL/TLS encryption mode within Cloudflare from Flexible to Full (strict).

I made the same change, and after just a few moments I was able to load the robots.txt in my browser successfully.

Word of caution: if you change the SSL/TLS encryption mode, double-check that it hasn't broken anything else on the website!
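Why would that setting matter? As I understand Cloudflare's documentation, Flexible mode means Cloudflare talks to your server over plain HTTP even when the visitor is on HTTPS. If the server (or WordPress) is set to redirect all HTTP requests to HTTPS, every request gets bounced back and never completes. The toy model below is only a sketch of that reasoning: the function names are made up, and the "origin forces HTTPS" behaviour is an assumption about a typical setup, not something I confirmed on my own server.

```python
# Assumption: the origin server redirects any plain-HTTP request to HTTPS.
ORIGIN_FORCES_HTTPS = True


def origin_reply(scheme_at_origin):
    """Toy origin: 301 for plain-HTTP requests, 200 otherwise."""
    if ORIGIN_FORCES_HTTPS and scheme_at_origin == "http":
        return 301
    return 200


def scheme_to_origin(mode):
    """Protocol the proxy uses towards the origin for an HTTPS visitor.

    "flexible" -> plain HTTP to the origin; anything else -> HTTPS.
    """
    return "http" if mode == "flexible" else "https"


def load_robots(mode, max_redirects=5):
    """Simulate an HTTPS visitor requesting /robots.txt through the proxy."""
    redirects = 0
    while redirects <= max_redirects:
        if origin_reply(scheme_to_origin(mode)) == 200:
            return f"200 after {redirects} redirects"
        # The visitor follows the redirect to HTTPS, but the proxy
        # contacts the origin with the same scheme as before.
        redirects += 1
    return "redirect loop"


print(load_robots("flexible"))
print(load_robots("full_strict"))
```

In this model the Flexible case never escapes the loop because the origin only ever sees HTTP, whereas with Full (strict) the origin sees HTTPS and serves the file straight away.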

How many sites are impacted by this?

I don't know the answer, but among my own WordPress sites that also use Cloudflare's free plan, I've found more than one affected by this.

Whilst the issue is quick to diagnose and fix, it's definitely one you would want to get sorted as soon as you can.

I've had issues with another website that actually dropped out of Google's index after a few months of neglect (WordPress, the plugins and the theme weren't being kept up to date). This caused a 5xx error on the robots.txt file, which led to Google "dropping" the website from their results.

After I updated things and sorted out the theme issue, the robots.txt loaded fine again and Google was very quick to re-index - within a matter of hours. But the site had been out of their index for several weeks!

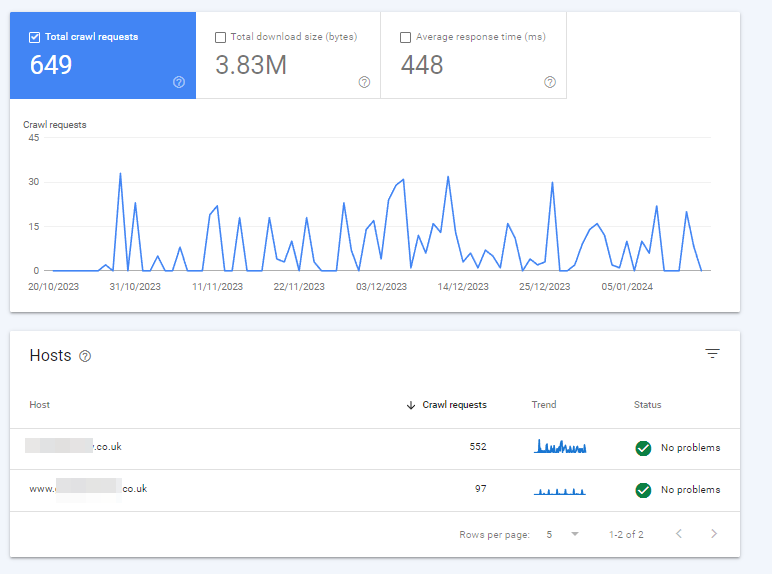

For the site with the Cloudflare issue above, I did check the Crawl Stats report in GSC, but I didn't notice a drop in Googlebot's visits since the robots.txt redirect loop began.

My guess is that it would take a while for Googlebot to drop a site from their index due to an inaccessible robots file, but I wouldn't like to risk that!
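For what it's worth, the difference between the two sites seems to line up with how Google says it handles robots.txt responses. The sketch below encodes my reading of Google's published guidance (server errors are treated as a temporary full disallow, while client errors and over-long redirect chains are treated as if there were no robots.txt). Treat the thresholds and labels as assumptions to check against the current documentation; the function name is hypothetical.

```python
def robots_fetch_outcome(status, redirect_hops=0, max_hops=5):
    """Rough classification of a robots.txt fetch result.

    Labels are illustrative, not Google's wording:
      "use_rules"            - file fetched, rules are parsed
      "allow_all"            - treated as if no robots.txt exists
      "disallow_all_for_now" - crawling paused until the file is reachable
    """
    # Assumption: too many redirect hops is handled like a 404.
    if redirect_hops > max_hops:
        return "allow_all"
    if 200 <= status < 300:
        return "use_rules"
    # Assumption: 429 is grouped with server errors.
    if status == 429 or 500 <= status < 600:
        return "disallow_all_for_now"
    if 400 <= status < 500:
        return "allow_all"
    return "unknown"


print(robots_fetch_outcome(503))                    # the neglected site
print(robots_fetch_outcome(301, redirect_hops=10))  # the redirect loop
```

If that reading is right, a 5xx on robots.txt is the more dangerous of the two, which would fit what I saw. I still wouldn't leave a redirect loop in place and hope for the best.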