Posting this publicly in the hope that it helps anyone who has struggled with this particular problem - it's not one that I've seen posted about very often within the SEO community.

I've been working with a new client that has a canonical domain at https://www.website.com, which I've had access to as a URL-prefix property in Google Search Console.

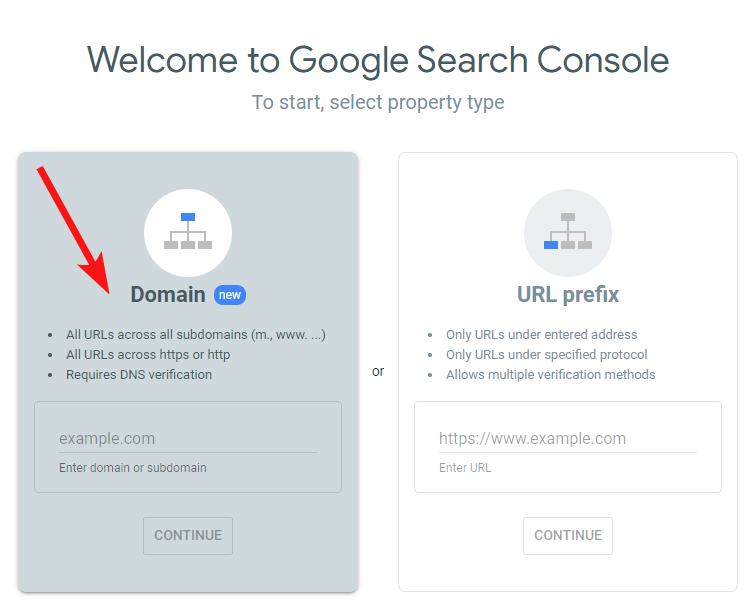

I realised that the client had a few old subdomains floating around, many of which I hadn't known about. Given some technical difficulties, I figured it would be wise to suggest setting up a Domain property in Search Console so I could get as much insight into their organic setup as possible.

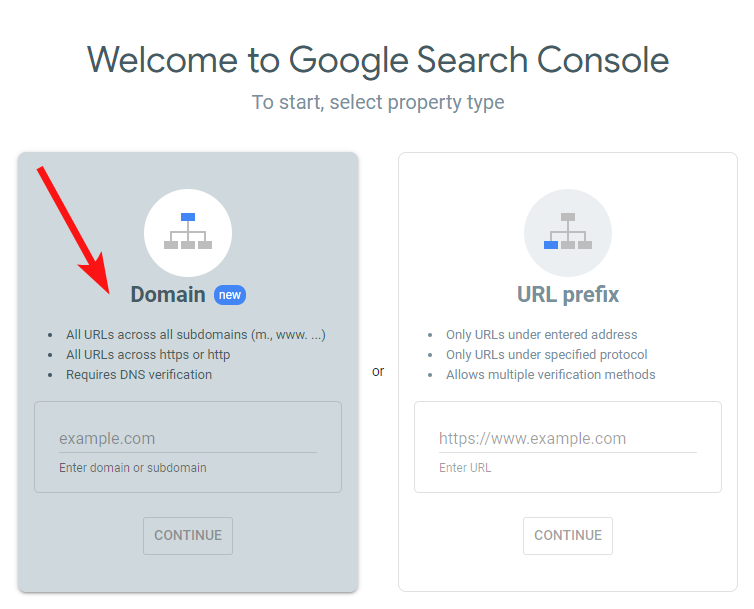

A Domain property type looks great (on paper, at least) because it should aggregate data from every variant and subdomain of the overarching domain. That means it includes the HTTPS and HTTP, www and non-www variants of a site, plus any subdomains.
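To make that roll-up concrete, here's a minimal Python sketch that lists the URL-prefix variants a Domain property for website.com would cover. The function name and the `blog` subdomain are hypothetical, and this isn't part of any Search Console API; it just builds strings. The same list doubles as the set of URL-prefix properties you'd add individually if you want a fallback.

```python
from typing import Iterable


def url_prefix_variants(domain: str, subdomains: Iterable[str] = ()) -> list[str]:
    """List URL-prefix properties rolled up by a Domain property for `domain`.

    Covers https/http crossed with the bare and www hostnames, plus any
    extra subdomains you already know about (hypothetical input; a real
    Domain property also includes subdomains you haven't listed here).
    """
    hosts = [domain, f"www.{domain}"]
    # Skip "www" if passed in, since it's already covered above.
    hosts += [f"{sub}.{domain}" for sub in subdomains if sub != "www"]
    return [f"{scheme}://{host}/" for host in hosts for scheme in ("https", "http")]


print(url_prefix_variants("website.com", ["blog"]))
```

With one extra subdomain this yields six URL prefixes: https and http for website.com, www.website.com and blog.website.com.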

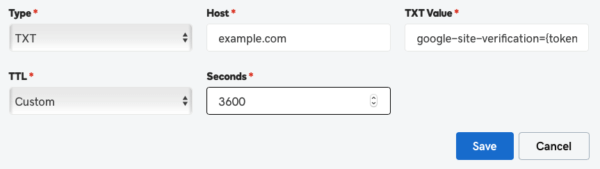

Verification is trickier here because it requires adding a TXT record to the domain's DNS configuration, so it might need a bit of hand-holding with your client, if not just a simple dev request.
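Because the proof of ownership is just a TXT record of the form `google-site-verification=<token>`, you can check whether it's still published yourself. A minimal Python sketch, assuming you've already fetched the domain's TXT records as strings (for example from `dig TXT website.com +short`); the helper names and token values are hypothetical.

```python
PREFIX = "google-site-verification="


def find_gsc_tokens(txt_records: list[str]) -> list[str]:
    """Return the Search Console verification tokens found in TXT records."""
    tokens = []
    for record in txt_records:
        # dig wraps each TXT value in double quotes; strip them off.
        value = record.strip().strip('"')
        if value.startswith(PREFIX):
            tokens.append(value[len(PREFIX):])
    return tokens


def is_still_verified(txt_records: list[str], expected_token: str) -> bool:
    """True if the token Search Console issued is still published in DNS."""
    return expected_token in find_gsc_tokens(txt_records)


records = ['"v=spf1 include:_spf.google.com ~all"', '"google-site-verification=abc123"']
print(find_gsc_tokens(records), is_still_verified(records, "abc123"))
```

This only handles the simple case of one quoted string per record; very long TXT values can be split into several quoted chunks, which the sketch doesn't reassemble.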

Finding Missing Data in your Domain Set

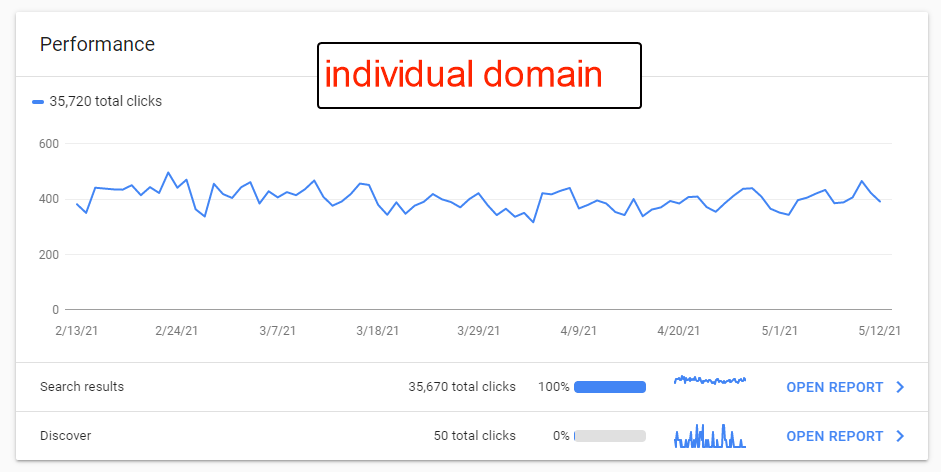

When I did get access to the Domain set in Search Console, one of my first checks was to compare total search clicks for the Domain set against the individual URL prefix.

This was quite a surprise - I'd expected the Domain set figure to be higher than that of the URL prefix, but the reverse was true. There were about 8K fewer clicks on the Domain set than on the URL prefix.

How Do You Diagnose Missing Data in a Domain Set?

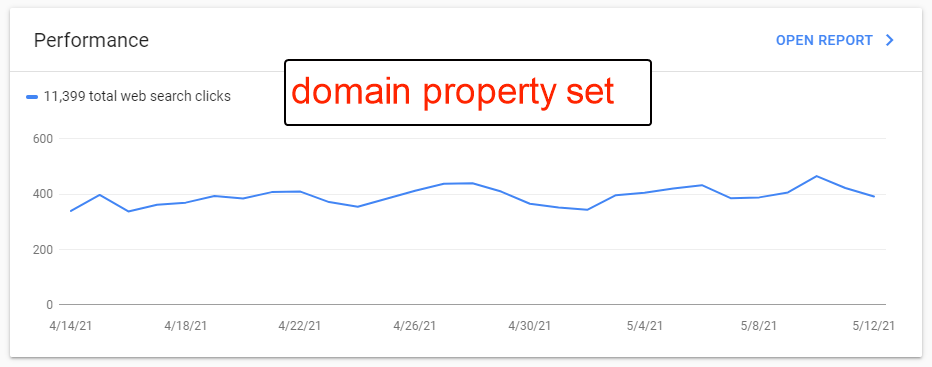

The only way I could see this issue more clearly was by focusing on the date range shown in the Search Performance graph. This actually had to be pointed out to me by another SEO (Briony Cullin), with whom I'd shared the screenshot in a private Slack group.

Reviewing these dates, and hovering over individual data points with my cursor, I could step through and check which dates actually had data. In my case, although I had selected the last 16 months, the graph only showed data from the current year (2021). This was my data hole. See if you can spot it in the animated GIF below.
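If eyeballing the graph isn't enough, a gap like this can also be found by checking the daily rows from an export. A minimal Python sketch, assuming you've exported the Performance report and pulled out its dates as `YYYY-MM-DD` strings; `find_date_gaps` is a hypothetical helper, not a Search Console feature. Passing the full selected range as `start`/`end` matters, because a hole at the beginning (like mine) sits before the first row of data.

```python
from datetime import date, timedelta


def find_date_gaps(days: list[str], start: date, end: date) -> list[tuple[date, date]]:
    """Return (first_missing, last_missing) ranges within [start, end]."""
    present = {date.fromisoformat(d) for d in days}
    gaps = []
    gap_start = None
    current = start
    while current <= end:
        if current not in present:
            # Open a new gap if we're not already inside one.
            if gap_start is None:
                gap_start = current
        elif gap_start is not None:
            # Data resumed today, so the gap ended yesterday.
            gaps.append((gap_start, current - timedelta(days=1)))
            gap_start = None
        current += timedelta(days=1)
    if gap_start is not None:
        gaps.append((gap_start, end))
    return gaps


print(find_date_gaps(["2021-01-01", "2021-01-02", "2021-01-05"],
                     date(2020, 12, 30), date(2021, 1, 6)))
```

A genuinely quiet day with no impressions may also be missing from an export, so treat any gap as a prompt to check the verification history rather than as proof.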

This isn't the first time that I've seen a fairly significant difference in clicks between a domain set and its URL prefix, so I do have a little bit of history here.

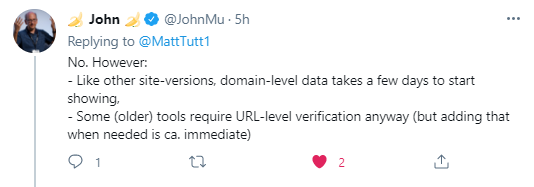

When I asked on the Google Webmaster forums and on Reddit, Google's John Mueller was happy to give his thoughts and suggested it could be a hole in the data caused by lost verification.

Others said I needed to wait a few days for the data to populate, but that didn't solve it, so John's answer seemed the best fit.

Losing verification at the DNS level - how?

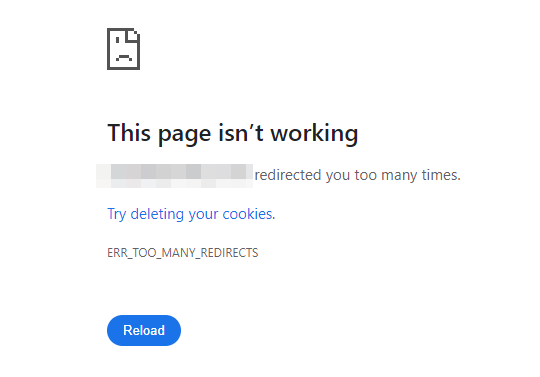

What I couldn't really figure out was how verification could be lost at the DNS level. In hindsight, there are several plausible scenarios: the web host (or whoever serves the DNS) goes down for a period of time, someone changes the DNS config and removes the TXT record, or the domain is moved to a new registrar and the record isn't carried over.

I already knew that verification was easy to lose for a URL prefix, as I've seen it happen many times: web devs remove the HTML verification file from a production server, or the meta tag gets removed from a site (e.g. in the Yoast plugin settings), and so on. That kind of loss is usually easy to pinpoint and resolve.

This particular issue had me scratching my head (more than usual), but in retrospect it's entirely possible, for the reasons above. I think this is the main takeaway (for me, anyway): Domain properties can lose verification too, and it might not be easy to spot when that has happened.

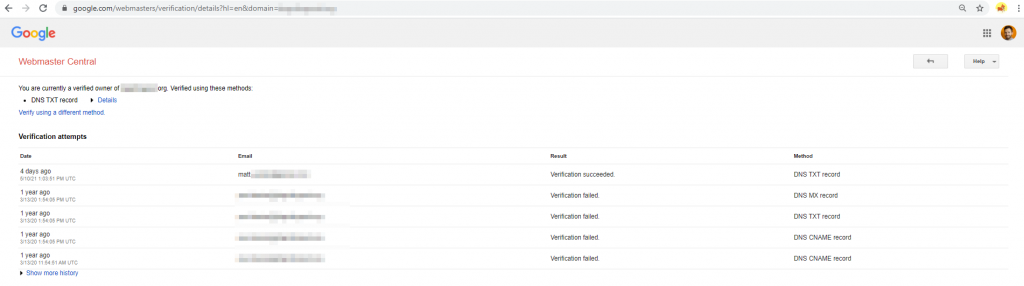

Determining when verification was lost - if at all?

The only way I could finally understand (and visualise) the issue was when a helpful member of the Google SEO forums pointed me to a page listing the verification details of the properties you own in Search Console: https://www.google.com/webmasters/verification/home?hl=en

From this page I selected the relevant domain set and could see its past verification issues, confirming the lengthy periods during which it had lost verification.

My problem here was two-fold:

a) I didn't realise (accept?) that domain set verification could be lost at the DNS level - I felt it was pretty safe/untouchable, and

b) I was unaware that this particular client had already claimed and verified the domain set within Search Console.

To me, Domain Sets still feel fairly new in Google Search Console, and a lot of new features have been rolled out and integrated over the past 12 months or so. The GSC screenshot even shows domain sets labelled as "New" (at what point will they stop being new?), yet they've actually been around since February 2019. So it was a surprise that this client had already set one up; I'd assumed they hadn't worked with an SEO provider until now. One to remember for the future!

I also wanted to mention how helpful John Mueller was. I don't like to bug him on Twitter, where he's always busy helping everyone with their own SEO problems, but he was kind enough to confirm what I later realised was the issue. As you can probably tell from the back and forth on Twitter, I was still in a bit of a pickle, so don't laugh at all of my desperate questions (please)!

So in summary: treat domain sets the same as individual properties, bookmark the verification link above for future reference, and don't assume a client (or their previous agency) hasn't already got their s*** sorted when it comes to Domain Sets! Unfortunately there's no way to get this lost data back, so as a workaround you'll probably want to make sure you have URL-prefix versions set up for any key URLs too, just in case.