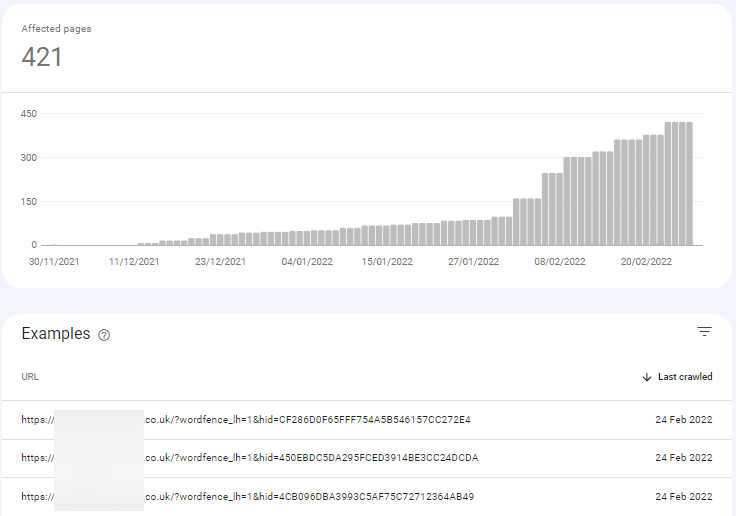

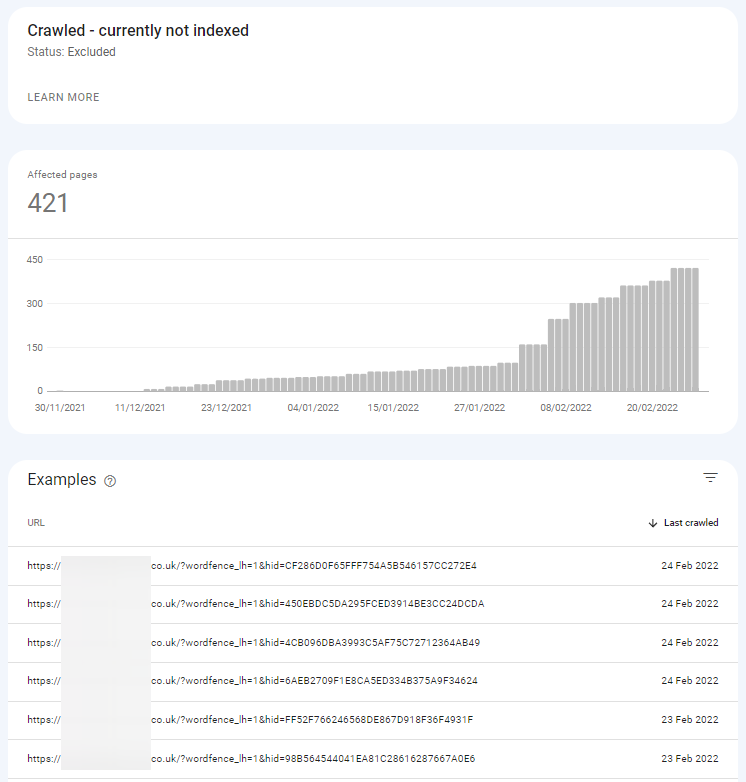

For a few websites I have added in Google Search Console, some of them my own side projects, some of them client sites, I've noticed a recent uptick in "Crawled - currently not indexed" errors in Search Console.

Looking at this issue in a bit more depth there was one repeat offender - apparently caused by the Wordfence security plugin for WordPress.

Whilst I don't want to throw Wordfence under the bus - perhaps it's not their fault - I'd like to understand why this is happening and to prevent it from continuing.

Why are these Wordfence pages an issue? Are they an SEO issue?

When I open Search Console to check for technical SEO issues, often by reviewing the "Coverage issues" report, I prefer not to have a relatively large number of errors reported - I want to cut the noise and only have the important things visible.

Whilst this Wordfence problem may be a bit of a non-issue (which I'll cover shortly), it could easily be obscuring actual issues that may be a bit buried within the GSC report. As an SEO specialist I want to make my life easier going forward. So I want these issues gone.

What is the problem with Wordfence and these URLs?

Just to clarify I'm 100% not a developer and all of the below will be based on my own research (aka searching on Google to fix the Wordfence issue).

I'm only writing this article up as A) it will motivate me to solve this nagging problem once and for all (I hope) and B) it should help speed up the time it takes others to fix the same issue.

Note - if you would rather hire an SEO consultant like me to help fix this issue for you - just get in touch and I'd be happy to deal with it for you. No win, no fee!

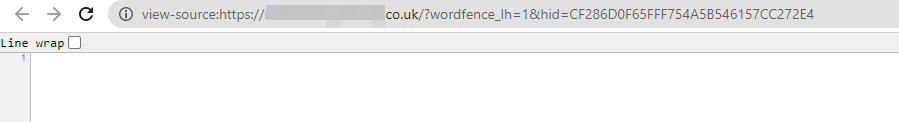

On WordPress the Wordfence auto-generated page URLs take the following format:

https://domain.com/?wordfence_lh=1&hid=JF352D0F65FFF754A5B546157CC272E4

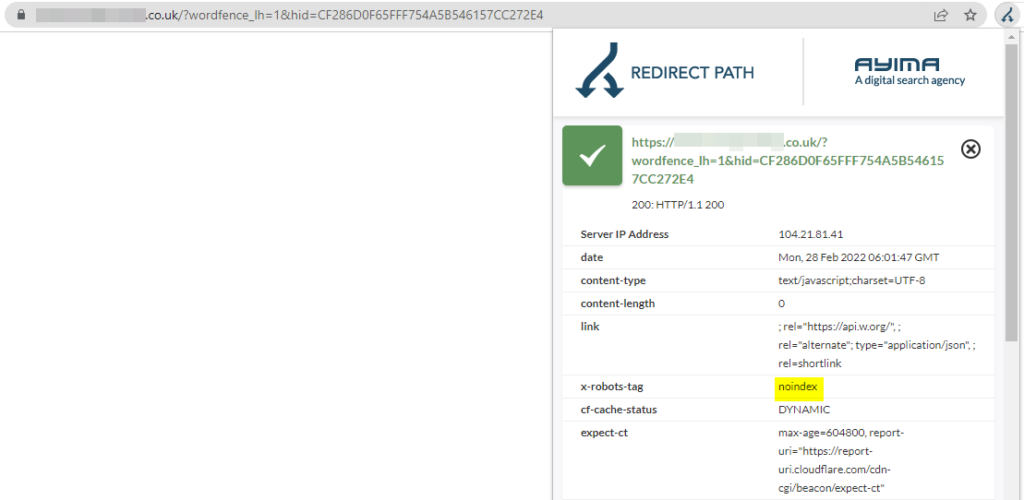

This is a parameter based URL - and the page is completely empty.
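If you export the affected URLs from Search Console, a few lines of Python can separate the Wordfence ones from real pages. This is only a rough sketch: the function name `is_wordfence_url` and the sample URLs are my own, and I'm assuming every Live Traffic URL carries a query key starting with `wordfence_` alongside an `hid` key, as in the example above.

```python
from urllib.parse import urlsplit, parse_qs


def is_wordfence_url(url: str) -> bool:
    """Return True if the query string looks like a Wordfence Live Traffic hit.

    Assumption: such URLs have a key starting with "wordfence_" plus an "hid" key.
    """
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    has_wordfence_key = any(key.startswith("wordfence_") for key in query)
    return has_wordfence_key and "hid" in query


# Hypothetical sample of URLs, e.g. pasted from a Search Console export.
urls = [
    "https://domain.com/?wordfence_lh=1&hid=ABC123",
    "https://domain.com/blog/my-post/",
]

# Keep only the URLs that match the Wordfence pattern.
wordfence_urls = [url for url in urls if is_wordfence_url(url)]
```

Flipping the filter (`if not is_wordfence_url(url)`) leaves you with the URLs that actually deserve attention.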

According to the SEO extension I use, the X-Robots-Tag header on this page is set to noindex. So in theory it shouldn't get into Google's index...
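If you'd rather not rely on a browser extension, the same header check can be roughed out in Python. Treat this as a sketch rather than a proper tool: `x_robots_values` and `is_noindex` are names I've made up, and the parsing only covers the simple comma-separated form of the header (optionally prefixed with a crawler name).

```python
from urllib.request import Request, urlopen


def x_robots_values(url: str) -> list[str]:
    """Fetch a URL with a HEAD request and return any X-Robots-Tag header values."""
    request = Request(url, method="HEAD")
    with urlopen(request, timeout=10) as response:
        return response.headers.get_all("X-Robots-Tag") or []


def is_noindex(header_values: list[str]) -> bool:
    """Return True if any header value contains a noindex (or none) directive."""
    for value in header_values:
        for part in value.split(","):
            directive = part.strip().lower()
            # Drop an optional "googlebot:" style prefix before the directive.
            if ":" in directive:
                directive = directive.split(":", 1)[1].strip()
            if directive in ("noindex", "none"):
                return True
    return False
```

Calling `is_noindex(x_robots_values(url))` on one of your own Wordfence URLs should tell you whether the noindex header is really being sent.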

For all intents and purposes this page should never really be generated, let alone have Google's grubby mitts all over it.

What if you have a very large site (a million plus URLs) - does this mean there could be tens of millions of Wordfence generated URLs? I don't know. But I do know that my site, consisting of a fairly measly 10 pages, now has over 400 of these Wordfence pages.

If I'm focusing on getting new quality content out there I really want to ensure I'm giving Googlebot my best possible content, not loads and loads of empty pages to sift through pointlessly.

Why does Wordfence generate these blank pages?

Because they like to keep us on our toes! (maybe?)

So the Wordfence query string URLs are actually used by the Live Traffic tool.

This is a tool to show you what is happening on your site in real-time. From the Wordfence website:

"Wordfence logs your traffic at the server level which means it includes data that JavaScript-based packages like Google Analytics do not show you. As an example, Live Traffic shows you visits from Google’s crawlers, Bing’s crawlers, hack attempts, and other visits that do not execute JavaScript. Typically Google and other analytics packages will only show you visits from web browsers that are operated by a human."

Taken from the Wordfence website - https://www.wordfence.com/help/tools/live-traffic/

How to disable Live Traffic by Wordfence - 2 options

It does seem fairly easy to disable or tweak this feature, which might end up saving your server resources and prevent the Wordfence page generation issue without sacrificing the security of your website.

There actually seem to be 2 options available to us here. Either we can reduce the consumption of the feature (by limiting it to check for security related traffic vs checking for all traffic) or we can try and disable the feature entirely.

Option 1 - Adjusting the Wordfence plugin options

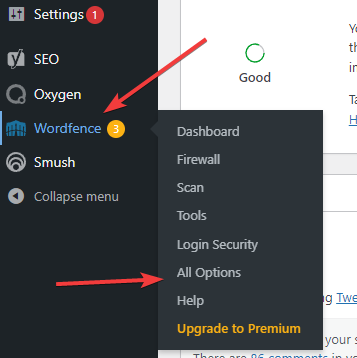

Step 1:

Simply log in to the WordPress dashboard of the affected site, hover over the Wordfence item and select All Options.

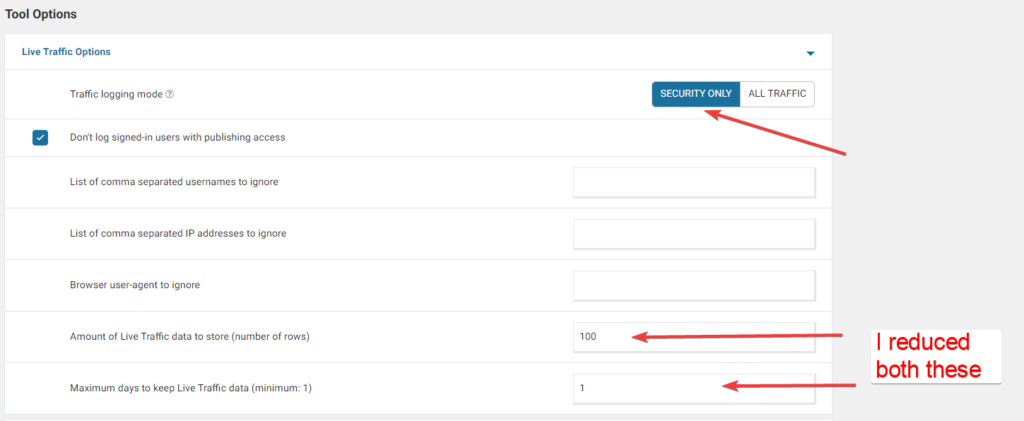

Step 2:

Scroll down to the bottom of the page and open the Live Traffic Options item.

Switch to Security Only as the Traffic logging mode, then change the Amount of Live Traffic data to store (number of rows) to a more sensible value (I chose 100) and reduce Maximum days to keep Live Traffic data (minimum: 1) down to 1.

I did that just to prevent some of the bloat, if it even helps.

Apparently there used to be an option to turn off the Live Traffic feature completely, but in the version of Wordfence I have this doesn't seem possible. I'm on the free plan.

Make sure you hit "Save changes" otherwise all your edits will be lost!

Option 2 - Disabling Live Traffic within wp-config.php

This was harder to find than I'd hoped - there's an advanced help section on the Wordfence website where they explain how to disable the live traffic feature entirely, by editing the wp-config.php file.

"The Live Traffic feature can be disabled either on the “Live Traffic” tool page or the “All Options” page by setting the traffic logging mode to SECURITY ONLY. However, if you need to stop other admins from enabling it then you can set this constant. This can be helpful for developers who have clients using slow hosts or if the client has an admin account and might turn on Live Traffic."

Wordfence's advanced constants page - https://www.wordfence.com/help/advanced/constants/

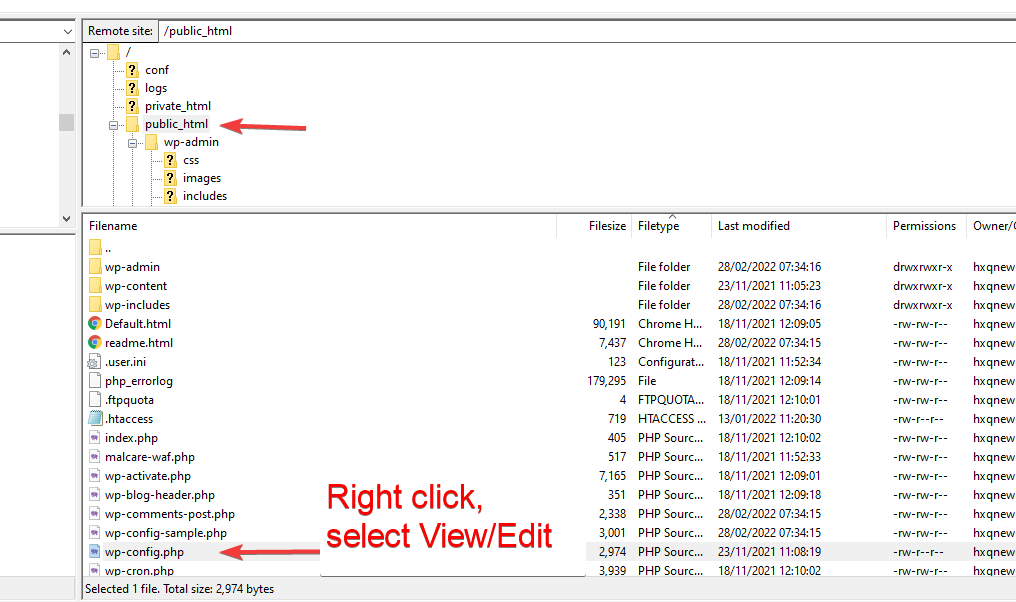

If you're handy with FTP access you can usually find the wp-config.php file in the root of your website's Public HTML folder, where WordPress has been installed.

Step 1

Open your FTP software and navigate to the root of the WordPress installation on the affected website.

Step 2

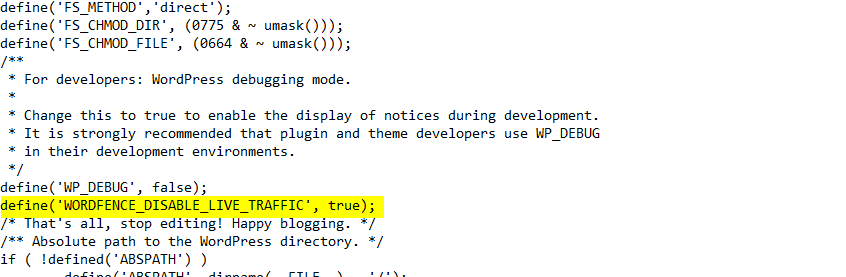

Make a copy of the file before you start making any edits, just so you have a fallback version in case you take your site down. Then, in the original file, add the required line of code:

define('WORDFENCE_DISABLE_LIVE_TRAFFIC', true);

Just make sure you add it before this line:

/* That's all, stop editing! Happy blogging. */
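If you have a local copy of wp-config.php and don't fancy editing it by hand, the backup-then-insert step could be sketched in Python like this. The constant name comes from the Wordfence docs quoted above; everything else (`add_constant`, `patch_file`, the `.bak` suffix) is my own invention, and I'm assuming your file contains the usual "stop editing" comment.

```python
import shutil
from pathlib import Path

# Start of the marker comment; the wording after "stop editing!" can vary.
MARKER = "/* That's all, stop editing!"
DEFINE_LINE = "define('WORDFENCE_DISABLE_LIVE_TRAFFIC', true);"


def add_constant(config_text: str) -> str:
    """Return config_text with the Wordfence constant inserted before the marker."""
    if "WORDFENCE_DISABLE_LIVE_TRAFFIC" in config_text:
        return config_text  # already present, leave the file untouched
    lines = config_text.splitlines(keepends=True)
    for index, line in enumerate(lines):
        if line.lstrip().startswith(MARKER):
            lines.insert(index, DEFINE_LINE + "\n")
            return "".join(lines)
    raise ValueError("stop-editing marker not found; edit the file by hand")


def patch_file(path: str) -> None:
    """Back up a local wp-config.php, then write the patched version in place."""
    shutil.copy2(path, path + ".bak")  # fallback copy in case something breaks
    original = Path(path).read_text(encoding="utf-8")
    Path(path).write_text(add_constant(original), encoding="utf-8")
```

Running `patch_file("wp-config.php")` on the downloaded copy leaves a `wp-config.php.bak` next to it, which is the version to re-upload if the site falls over.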

Step 3

Re-upload the wp-config.php and keep your fingers crossed as you refresh the page, hoping to not have taken your site offline in the process 🙏.

Of course it won't be quick to find out if the problem is resolved - you might need to wait a few weeks to see if GSC still throws out those Wordfence page URLs.

If you're not very patient you can also check your server logs from your host to see if you spot those URLs being generated and accessed by any crawlers. In theory these pages shouldn't be created anymore - so if you see them popping up after making this change then perhaps something isn't quite right.
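For the log check, something along these lines might do the job. It's a sketch under a few assumptions: that your host gives you access logs in the common Apache/Nginx "combined" format, that the Wordfence requests contain `wordfence_` and `hid=` in the query string, and that spotting "googlebot" in the line is a good enough way to identify Google (it doesn't verify the crawler is genuine). The function name `wordfence_hits` is hypothetical.

```python
import re
from collections import Counter

# Pulls the requested path out of the quoted request part of a combined-format line.
REQUEST_RE = re.compile(r'"(?:GET|HEAD|POST) (\S+) HTTP/[^"]*"')


def wordfence_hits(log_lines) -> Counter:
    """Count requests for Wordfence-style URLs, split into googlebot vs other."""
    hits = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue
        path = match.group(1)
        if "wordfence_" in path and "hid=" in path:
            agent = "googlebot" if "googlebot" in line.lower() else "other"
            hits[agent] += 1
    return hits
```

You could feed it a log file with `wordfence_hits(open("access.log", encoding="utf-8", errors="replace"))` and compare the counts from before and after the wp-config.php change.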

Are there SEO issues caused by the Wordfence page URLs?

It shouldn't impact your website's SEO in any way - unless you are getting such a huge number of pages generated that it might be causing issues with Googlebot crawling through these and finding your good content. I think that is very unlikely to happen though.

There could be more cause for concern if you have an old theme or plugin which is causing the created URLs to contain content - instead of being just a blank page.

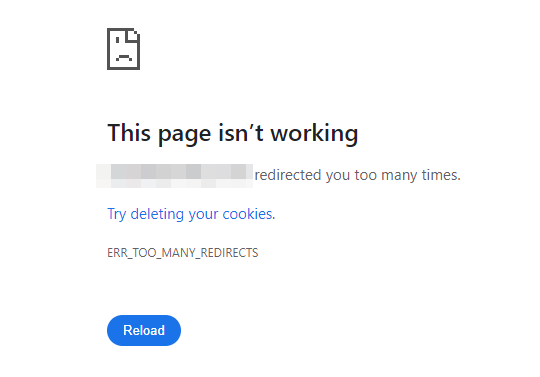

This issue might give Googlebot pause for thought (is this genuine content we should be indexing?) and I've read of others having this issue. There are also reports of these Wordfence pages 301 redirecting back to the homepage of the site, as some kind of botched fix by theme developers, which can also be problematic.

A few users on the internet are reporting that Google was in fact indexing these Wordfence page URLs - but this was likely due to a custom theme issue, whereby instead of serving a blank page the site loaded the homepage or redirected to it. To Googlebot this sometimes looked like actual content to index: not just a blank page.

My general SEO approach is to make Google's life easier, and to treat them as an idiot (sorry, Googlebot). So I'd rather avoid feeding them anything that may leave Googlebot scratching their head 🤖.

People experiencing this issue are recommended to try reverting to a default WordPress theme (e.g. Twenty Twenty), and to clear their caches - or to speak to their theme developers. One of the Wordfence team elaborated in a forum post:

"…Sometimes themes use the WordPress template redirect hook incorrectly which results in all requests with a query string end up serving the full contents of the page."

Wordfence plugin support team

If this was happening on one of my sites and Google was actually indexing them then yes I'd say that's going to be an SEO issue to resolve.

For me the main annoyance caused by this quirk of Wordfence is that it makes quick analysis of Search Console reports harder.

Right now I have a website that has got some fairly fresh new content in the form of blog posts - which Google seems to be ignoring (that old "Crawled not indexed" chestnut). But it's not easy for me to see the extent of this problem at a quick glance when they're reporting all of these irrelevant Wordfence URLs.

Update - Robots.txt edit to prevent crawling

After sharing the article on Twitter I had some great feedback - it was suggested that editing my robots.txt file to prevent crawlers accessing those parameter based URLs would be a good fix.

Great article, you could also disallow Google bot from crawling those pages by updating your robots.txt

— ₿rendan Sluke (@BrendanSluke) March 25, 2022

Google may still try to crawl the old links it already knows exist, even if no new links of that type are generated after your update.

It was also confirmed by John Mueller of Google that it's unlikely to cause any SEO issues.

We don't do anything special for wordfence. URLs are URLs :-). If they lead nowhere, we sometimes learn and ignore them (but sometimes it takes long). Easiest is just blocking via robots.txt, eg

disallow: /*?parameter=

I doubt they would cause problems though, even crawled.

— 🐐 John 🐐 (@JohnMu) March 25, 2022
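Before committing a rule like that to robots.txt, it can be worth sanity-checking which URLs it would actually catch. Below is a rough Python sketch of Google-style pattern matching, where `*` matches any run of characters and a trailing `$` anchors the end. It's my own approximation rather than Google's real parser, the helper names are made up, and the `wordfence_lh` parameter in the usage example is an assumption you should swap for whatever appears in your own URLs.

```python
import re
from urllib.parse import urlsplit


def robots_pattern_to_regex(pattern: str) -> re.Pattern:
    """Convert a robots.txt path pattern (with * and optional trailing $) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and let each * match any run of characters.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(url: str, disallow_pattern: str) -> bool:
    """Return True if the URL's path and query would match the disallow pattern."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # Robots rules match from the start of the path, hence match() not search().
    return robots_pattern_to_regex(disallow_pattern).match(target) is not None
```

For example, `is_blocked("https://domain.com/?wordfence_lh=1&hid=ABC", "/*?wordfence_lh=")` comes back true, while an ordinary blog post URL with no query string is left alone.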

When I asked John how Googlebot might find those URLs in the first place (I saw no internal references to them in my site code or elsewhere) he suggested the following:

Well, we wouldn't make them up :-). If they're used for live traffic stats, then I imagine they're dropped as an embed on the pages. You'd probably see them if you looked at the network tab in Chrome dev tools while loading a page like that.

— 🐐 John 🐐 (@JohnMu) March 25, 2022

Since making the edit to my site's wp-config.php file there has only been 1 reported URL within the "Crawled - currently not indexed" report over a period of about 3 weeks - so I think it's done the job.

If I wanted to be sure to remove them I'd probably want to delete those pages and serve a 410 status code (gone - not returning) which, when next visited by a crawler, would ensure they're dropped. That should also ensure those pesky warnings/errors in Search Console get removed too.

Only then would I want to edit my robots.txt to prevent crawlers hitting those pages again, but IMO that would be overkill at this point.

If you want to hire a freelance SEO to help with things like the above, well, you know where to find me! 😉