I'm seeing an issue at the moment that appears to be specific to sites hosted on SiteGround. I'd be happy to be proven wrong, but from all my tests it looks that way.

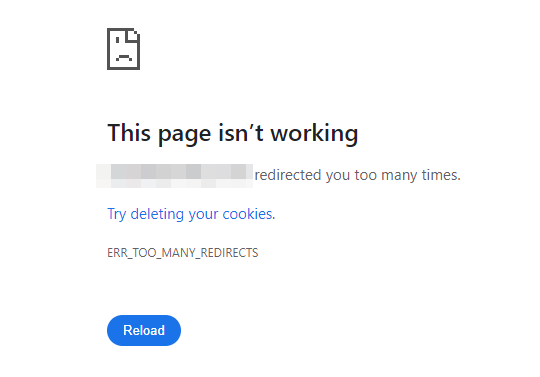

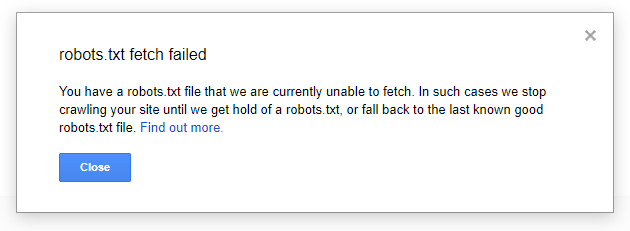

The issue I'm seeing is that Googlebot is unable to fetch the site's current robots.txt file, so Google is either not crawling the site at all or is falling back to a previous version of the robots.txt file.

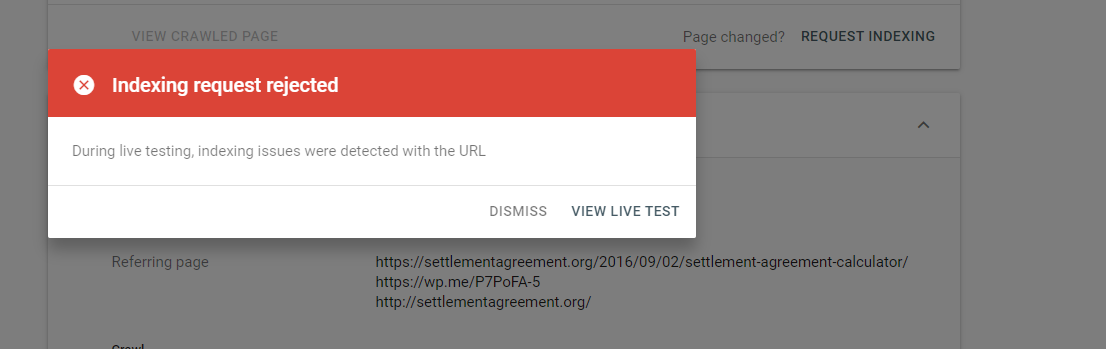

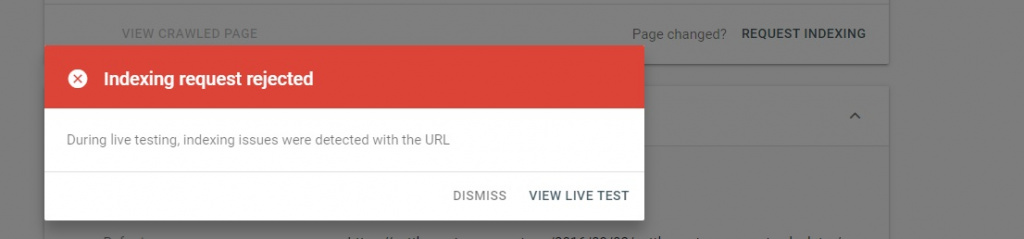

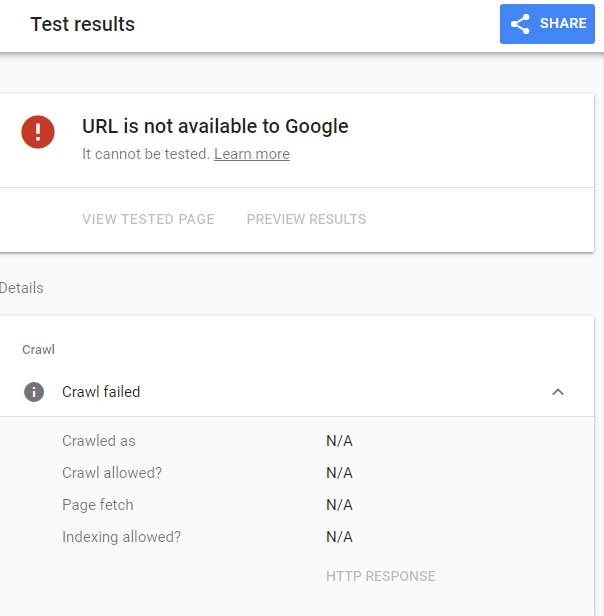
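For context on why an unreachable robots.txt stops crawling altogether: Google's robots.txt documentation describes how Googlebot reacts to different fetch outcomes, and it generally caches robots.txt for up to 24 hours, which fits with Google falling back to an older copy. The sketch below is my own simplified reading of that documented behaviour, not Google's code; the function name `google_robots_policy` is hypothetical, and `None` stands in for a network error, DNS error or timeout.

```python
from typing import Optional


def google_robots_policy(status: Optional[int]) -> str:
    """Simplified summary of how Google documents handling a robots.txt fetch.

    `status` is the HTTP status code returned for /robots.txt, or None when
    the fetch failed outright (network error, DNS error or timeout).
    This is an illustrative sketch, not Google's actual implementation.
    """
    if status is None or status == 429 or 500 <= status <= 599:
        # Server and network errors: Google treats the site as fully disallowed
        # and keeps retrying; per its docs, after 30+ days unreachable it may
        # use the last cached copy instead.
        return "treat as fully disallowed"
    if 200 <= status <= 299:
        return "parse and obey the file"
    if 300 <= status <= 399:
        # Google follows a limited number of redirect hops, then treats it as a 404.
        return "follow redirects"
    if 400 <= status <= 499:
        # Client errors (other than 429): crawl as if there were no robots.txt.
        return "crawl without restrictions"
    return "unexpected status"


print(google_robots_policy(None))  # treat as fully disallowed
print(google_robots_policy(404))   # crawl without restrictions
```

The `None` case is the situation described above: Googlebot can't reach the file at all, so it holds off crawling rather than assuming everything is allowed.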

I noticed this yesterday on a site I was building for a client. The dev site had been noindexed, so I removed the noindex tags from all pages, then used the URL Inspection tool in Search Console, and Google wasn't able to access the page.

At first I was convinced this was an issue with Google's tool. I also checked with the Rich Results Test and the Mobile-Friendly Test, and both of these failed too.

I could crawl the site with Screaming Frog without any issues.

I then tested using Bing Webmaster Tools' Inspect URL feature, and Bing did get a 200 status code for that page, so I knew this issue was specific to Googlebot.

In some Twitter chat, I noticed someone I follow mentioning that Google couldn't access their sitemap. They're also using SiteGround (I asked them, as I had a hunch).

I then put some of my sites through Google's legacy robots.txt tester tool, and eventually found the error message below.

I don't know whether this is specific to a certain setup of sites on SiteGround (maybe plugin-, cache- or location-specific?), but I have noticed very similar chatter on the Google Webmaster forums from people having the same kind of issues.

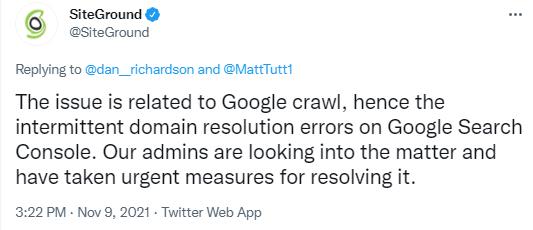

Update - SiteGround admitted there's an issue

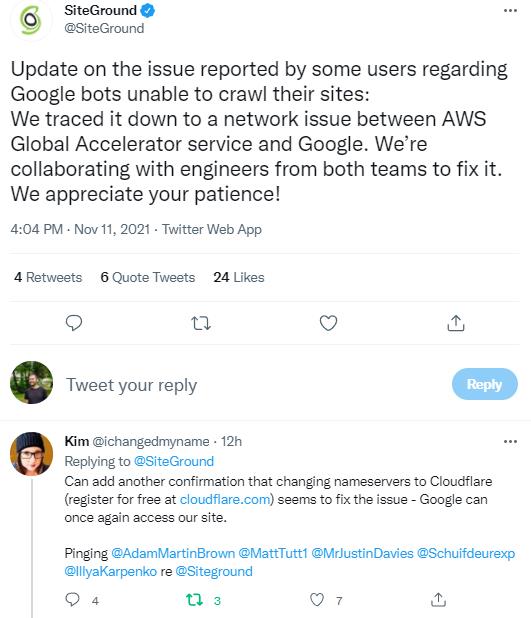

Nice to find out I haven't gone completely mad: they've announced that there have been issues with Googlebot and that they're working to resolve them. The screenshot is from SiteGround's Twitter account, and here's a link to my Twitter thread too.

Hopefully they'll resolve it fairly soon. Personally, I spent a bit too long trying to figure out what could be wrong with my client's site. I hate to imagine how much time everyone else has wasted chasing their tails!

Second Update - 11/11/2021 (07:30 AM - CET)

So another 24 hours have passed since SiteGround were alerted to this issue (I think it's actually even longer than this: I first noticed the problem on the 8th of November), and the issues still persist.

Since I posted this on Twitter on Tuesday, I've seen many people tweeting about their own issues. One unexpected (but, in hindsight, obvious) additional impact has been on the PPC side of things, for people trying to run Google product ads / Merchant Centre ads. Googlebot also crawls these heavily to check the product listings, so if SiteGround-hosted sites aren't available to Google (for whatever reason), it will fail to reach those products and the ads will fail or not run. This can get quite expensive for clients, especially at this time of year!

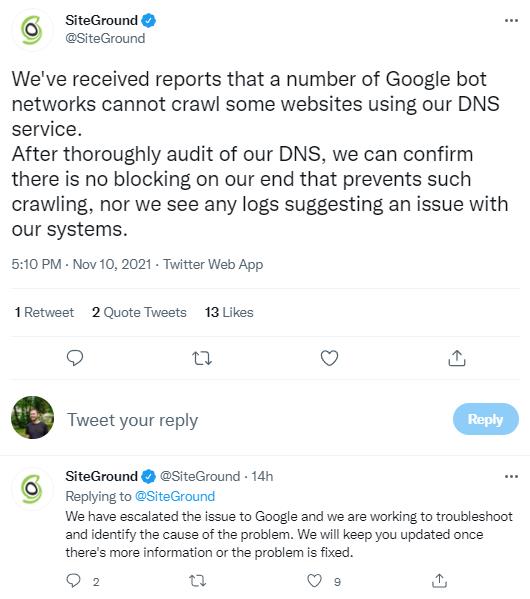

From a few of the support threads I've seen shared, and from SiteGround's own updates, I get the feeling that:

- SiteGround are claiming they are not at fault, and that Google are to blame.

- They've admitted that they use Google Cloud for hosting, so I find it funny that Google can't access its own "product" in this way.

- They've said they're working "all hands on deck" to resolve this, which I think was their original stance too! In other words, not much has changed.

So it doesn't inspire a lot of confidence when all this is going on and being shared publicly. A few people on Twitter have announced they'll be looking to move hosts, and I can't blame them!

SiteGround admitting the issue - and passing the blame (https://twitter.com/SiteGround/status/1458467145734660099)

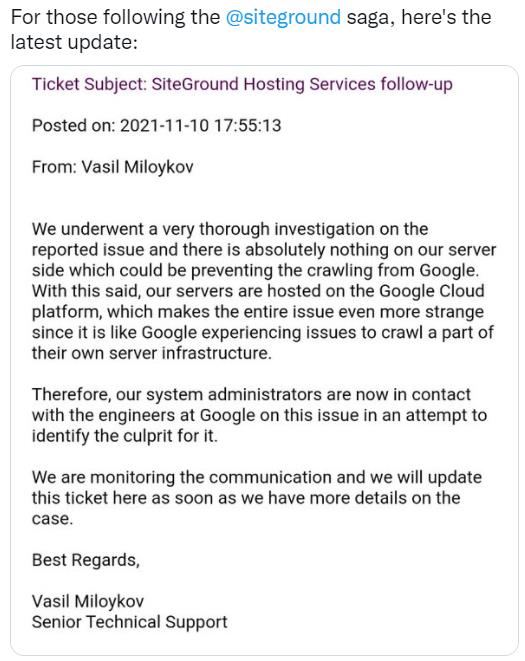

One positive from this has been seeing others share their own updates and help spread the word about the issue, in a bid to rally SiteGround to sort it out. Twitter user Kim helpfully shared this update, in which SiteGround say they use Google Cloud.

This update kindly came via Kim on Twitter, another person who has been hit by this issue: https://twitter.com/ichangedmyname/status/1458594577292709889

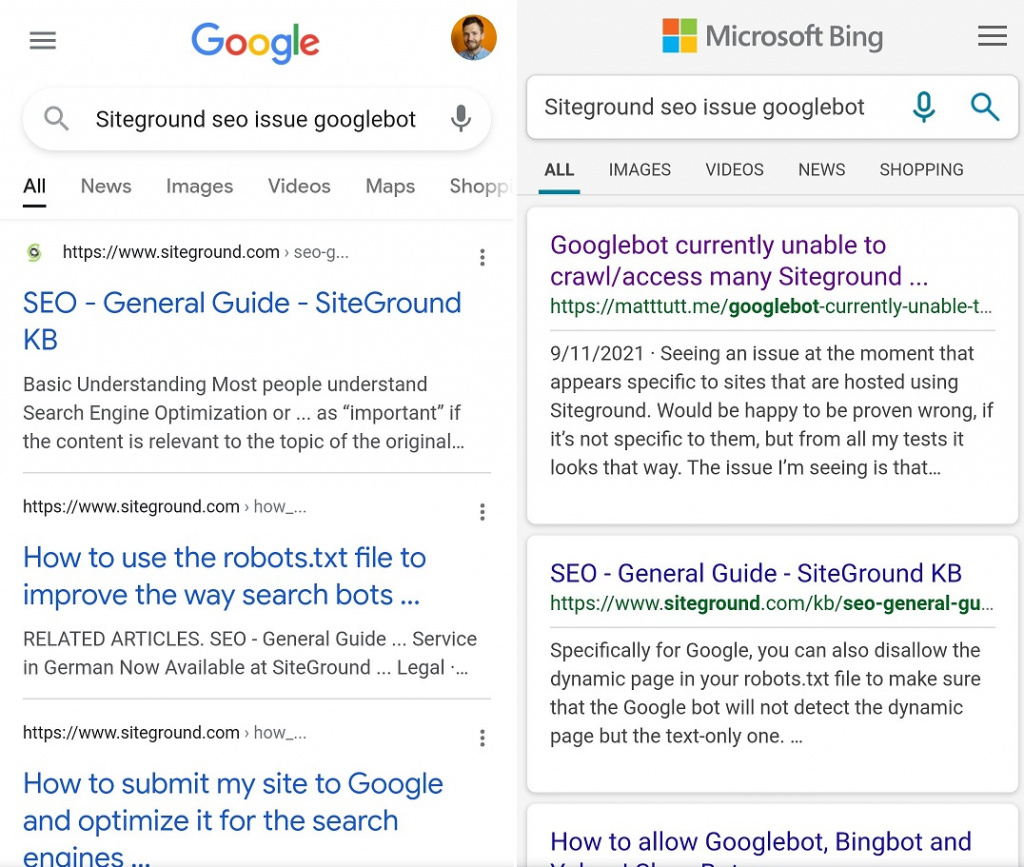

I took this set of screenshots to highlight the issue, using this exact article. As a side note, I did nothing to "submit" the article to Bing via their Webmaster Tools (I'm not even sure I've submitted my XML sitemap to them), but regardless, Bing found and indexed it fairly swiftly.

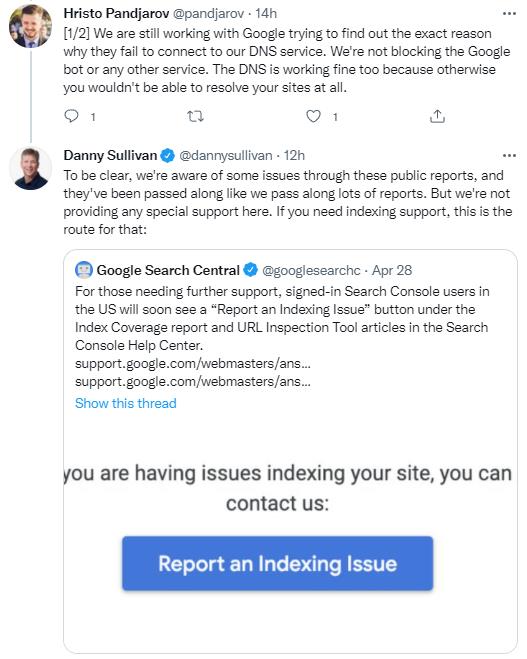

Finally - these updates from Danny Sullivan of Google and Hristo of SiteGround don't exactly fill me with confidence either:

An update from Google's Danny Sullivan, responding to Hristo (an employee of SiteGround) about the issue - https://twitter.com/dannysullivan/status/1458489019864543235

Latest update from Hristo - claiming to be waiting on Google - https://twitter.com/pandjarov/status/1458682601263276032

So, when will this be resolved? Sadly, it doesn't look like it will be any time soon.

Third Update - 12/11/2021 (06:45 AM - CET)

As comical as this may seem, the SiteGround issue is still persisting! By some accounts, this means sites have been unavailable to Google for 5 days (I've seen reports on Twitter that this was first flagged on Monday)!

How to fix the problem yourself (caveats apply)

I hadn't bothered with this initially, as I'd assumed (silly me) that a company like SiteGround wouldn't allow such a big issue to persist. I was aware that using Cloudflare might resolve it, simply because some SiteGround-hosted sites that were already on Cloudflare weren't affected when this issue was first announced.

This was driven home to me by various people on Twitter sharing how it worked for them, and that it was a quick and easy solution.

So last night I set up a free Cloudflare account, then changed my nameservers (at my domain registrar) to point to the Cloudflare nameservers, and within about 15 minutes the change had propagated!

I checked everything was OK by running Google's Rich Results Test, and I got a proper response to the crawl request. Success!

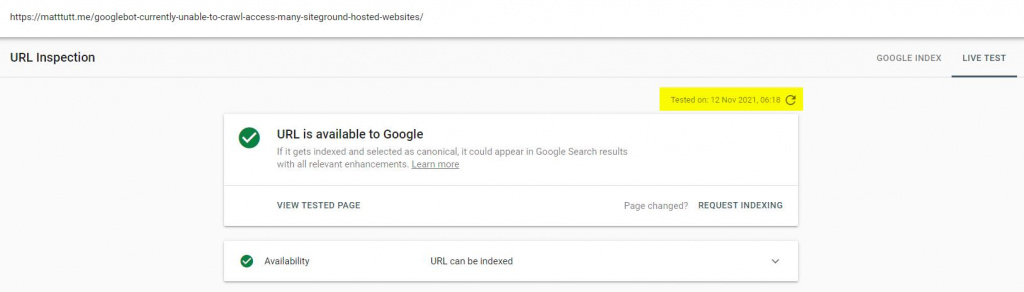

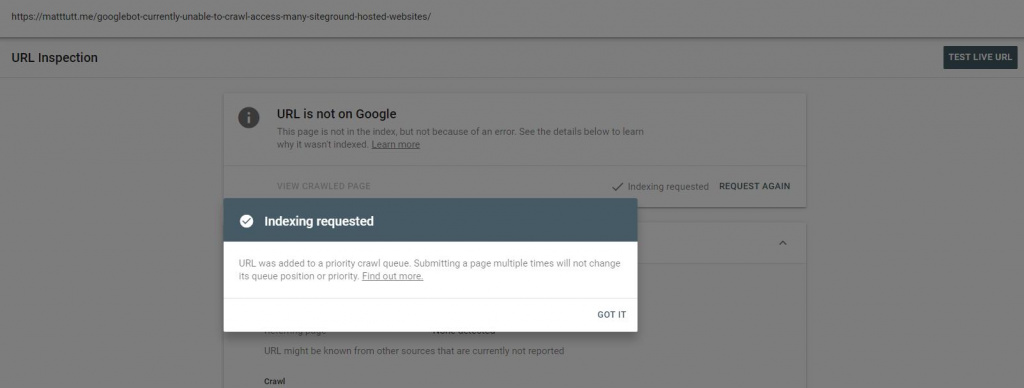

This morning I ran the URL Inspection tool in Search Console for this same site (this very blog post, actually), and I can confirm the URL is available to Google.

This post hadn't been indexed yet, so I requested indexing too, and that was all accepted without problem - see below.

Summary of the fix using Cloudflare

- Open a free account with Cloudflare (note: it seems important that this is a new account, or one that hasn't been set up before with SiteGround).

- Add your domain. I don't think you can add more than one on the free plan, so it might be worth paying if this issue is costing your business (e.g. you've got important pages to index and/or are trying to run Google Ads).

- After going through the setup (which is very quick), take the nameservers Cloudflare gives you and go to your domain registrar (e.g. GoDaddy, 1&1, Namecheap).

- Edit the nameservers there: just copy and paste the two given by Cloudflare. If you struggle to find this setting, try Googling "change nameservers {godaddy/namecheap/1&1}" and you should find a guide.

- After saving the nameservers, wait for the change to propagate. In my case it took 15 minutes, but it could take hours; I got a warning message that it could take up to 48 hours! Luckily it wasn't that bad.

- To test whether things have changed, use the URL Inspection tool in Search Console (just remember to choose the "Test Live URL" option!), or paste the site URL into the Rich Results Test or even Google's Mobile-Friendly Test. These should show whether Googlebot can reach the page without issue (before, it was completely blocked).

- Lie down and have a rest! Maybe even celebrate by going outside for a walk.
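If you'd like to confirm the nameserver switch itself before reaching for Google's tools, you can run `dig NS yourdomain.com +short` (swap in your own domain) and look at what comes back; Cloudflare-assigned nameservers end in `.ns.cloudflare.com`. Below is a minimal sketch, assuming you feed it that `dig` output as text. The helper name `uses_cloudflare_nameservers` is hypothetical, the nameserver names in the example are made up, and your local resolver may serve cached (old) records for a while. It only tells you DNS has moved; the Google tools above are still what show whether Googlebot can reach the page.

```python
from typing import Iterable


def uses_cloudflare_nameservers(nameservers: Iterable[str]) -> bool:
    """Return True if every listed nameserver is a Cloudflare one.

    Accepts hostnames as printed by `dig NS example.com +short`,
    which usually carry a trailing dot. Blank lines are ignored.
    """
    cleaned = [ns.strip().rstrip(".").lower() for ns in nameservers if ns.strip()]
    # An empty list means the lookup returned nothing, so don't report success.
    return bool(cleaned) and all(ns.endswith(".ns.cloudflare.com") for ns in cleaned)


# Hypothetical `dig` output after the switch (names made up for illustration).
after_switch = """anna.ns.cloudflare.com.
bob.ns.cloudflare.com.
"""
# Hypothetical output while the old nameservers are still being served.
before_switch = """ns1.example-host.net.
ns2.example-host.net.
"""

print(uses_cloudflare_nameservers(after_switch.splitlines()))   # True
print(uses_cloudflare_nameservers(before_switch.splitlines()))  # False
```

Requiring every nameserver to match (rather than any) catches a half-finished edit where one old nameserver was left in place at the registrar.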

Why didn't SiteGround make it clear from day one that this would be a good temporary fix? Well... no idea! It's not great that we have to use this workaround to fix their issue, but I know it would've solved a lot of problems, and would've been better than posting nonsense on Twitter like the tweet below...

As a side note, I spent a long time yesterday getting my bits together to leave SiteGround. It wasn't just a result of this whole issue; I haven't been a fan for a long time. I've also got a €500 renewal fee coming up with them for the next 12 months, so this has been a timely reminder to sort myself out.

I've heard good things about Cloudways, but again, it will depend on what you're looking for in a host. Personally, I want one that isn't going to be inaccessible to Google for 5 days and counting... but maybe that's just me!